Metrics design

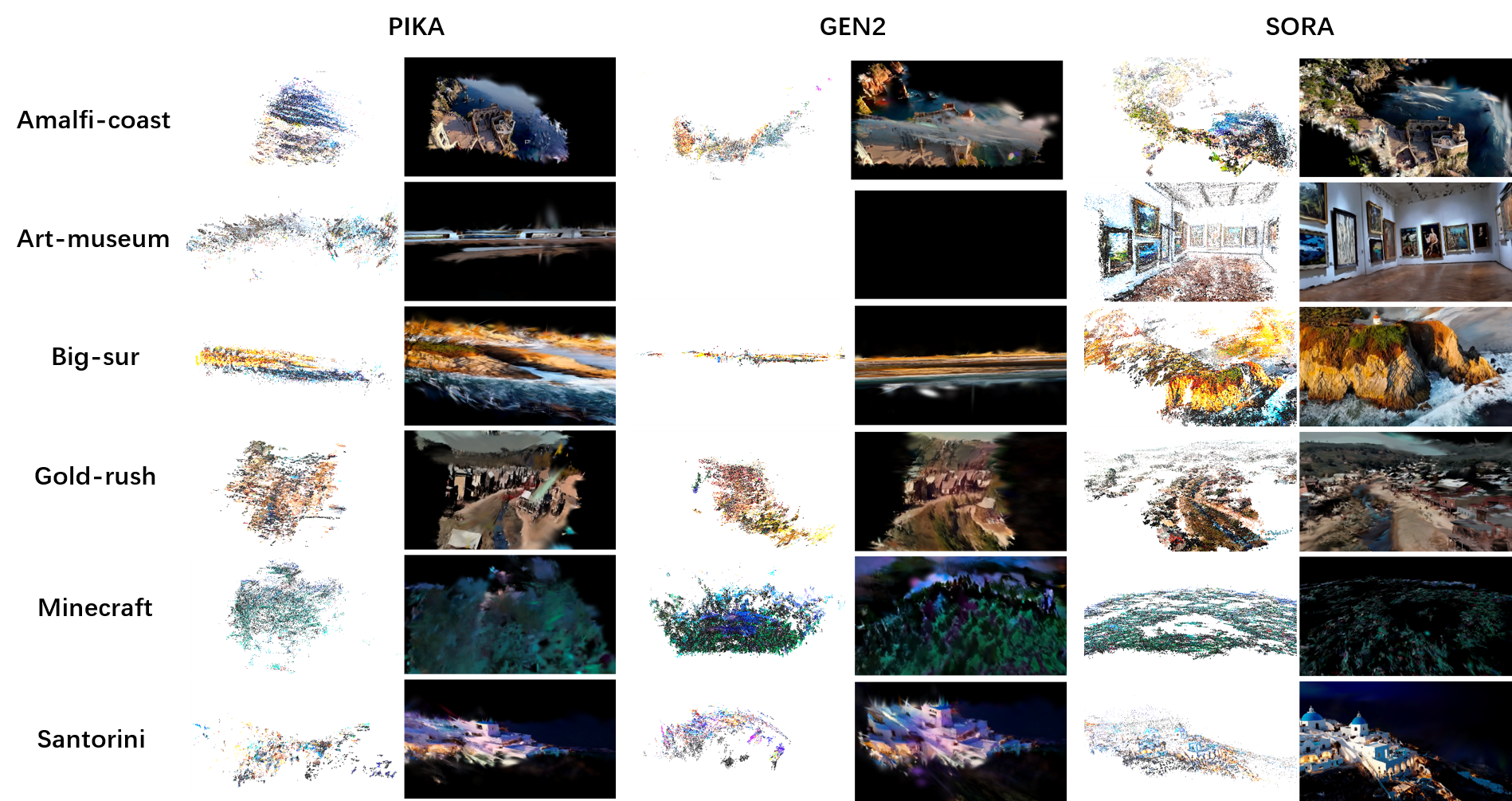

The foundational principle of SFM (Structure from Motion) [14] and 3D reconstruction is multi-view geometry, so the quality of the reconstructed model depends on two main factors: 1) the virtual cameras observing the scene in the generated video must closely follow a physical imaging model, such as the pinhole camera; 2) as the video progresses and the perspective changes, the rigid parts of the scene must vary in a way that remains physically and geometrically stable.

Furthermore, the fundamental unit of multi-view geometry is two-view geometry. The higher the physical fidelity of the

AI-generated video, the more its two frames should conform to the ideal two–view geometry constraints, such as epipolar

geometry. Specifically, the more ideal the camera imaging of the virtual viewpoints in the sequence video, the more faithfully

the physical characteristics of the scene are preserved in the images. The closer the two frames adhere to ideal two–view

geometry, and the smaller the distortion and warping of local features in terms of grayscale and shape, the more matching

points can be obtained by the matching algorithm. Consequently, a higher number of high-quality matching points are

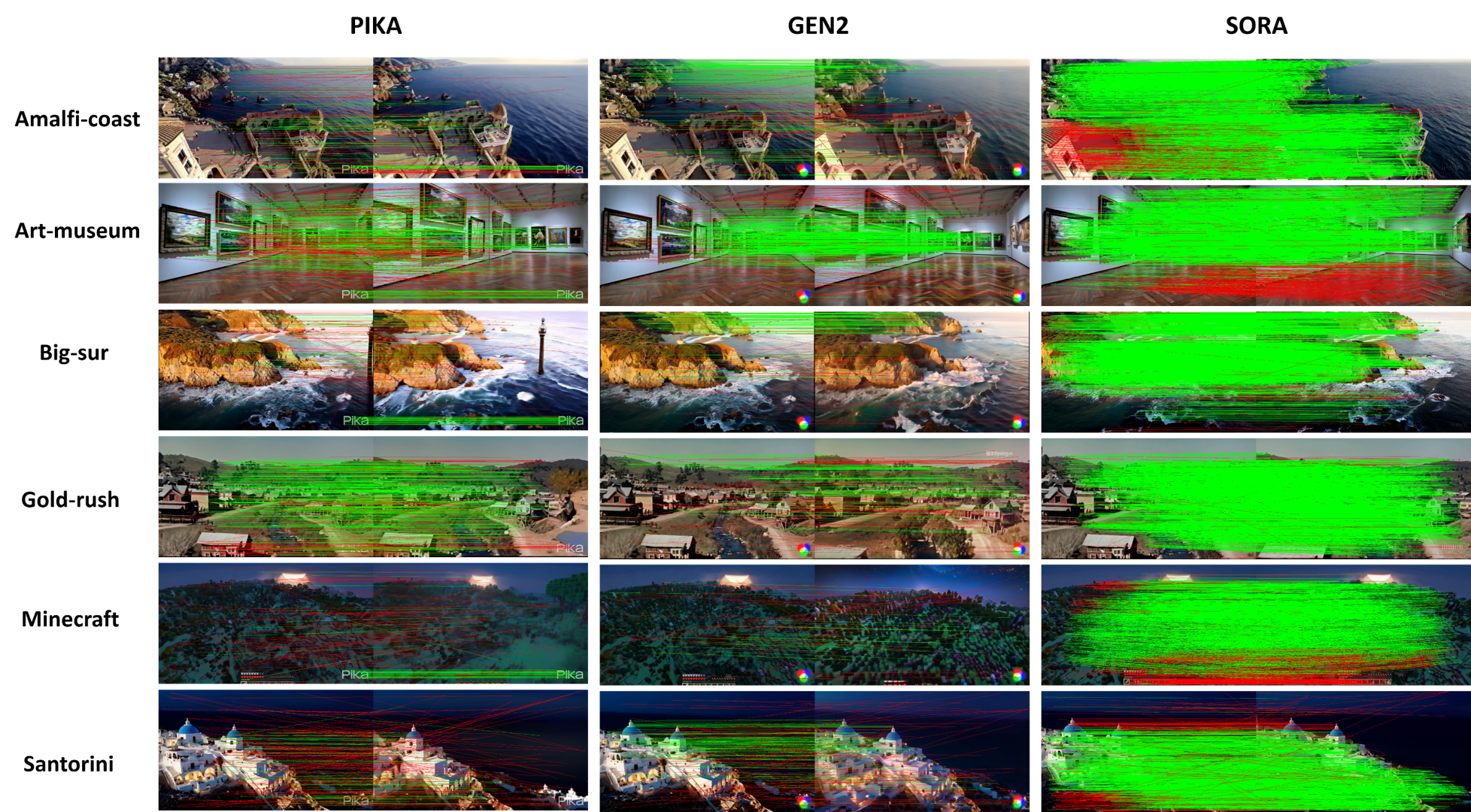

retained after RANSAC [19]. Therefore, we extract two frames at regular intervals from the AI-generated videos, yielding

pairs of two-view images. For each pair, we use a matching algorithm to find corresponding points and employ RANSAC

based on the fundamental matrix (epipolar constraint) to eliminate incorrect correspondences.
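For reference, the epipolar constraint behind this filtering step can be written out explicitly. Here $x$ and $x'$ denote a candidate correspondence in homogeneous pixel coordinates and $F$ is the fundamental matrix estimated by RANSAC. The convention $x'^{\top} F x = 0$ and the threshold $\tau$ are assumptions for illustration; the text does not specify the threshold or the exact residual used inside RANSAC.

$$
x'^{\top} F x = 0, \qquad d(x', Fx) = \frac{\lvert x'^{\top} F x \rvert}{\sqrt{(Fx)_1^{2} + (Fx)_2^{2}}}
$$

Under this sketch, a correspondence counts as an inlier when the point-to-epipolar-line distance $d(x', Fx)$ falls below $\tau$ pixels. Some implementations use a different residual, such as the Sampson distance, for the same test.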

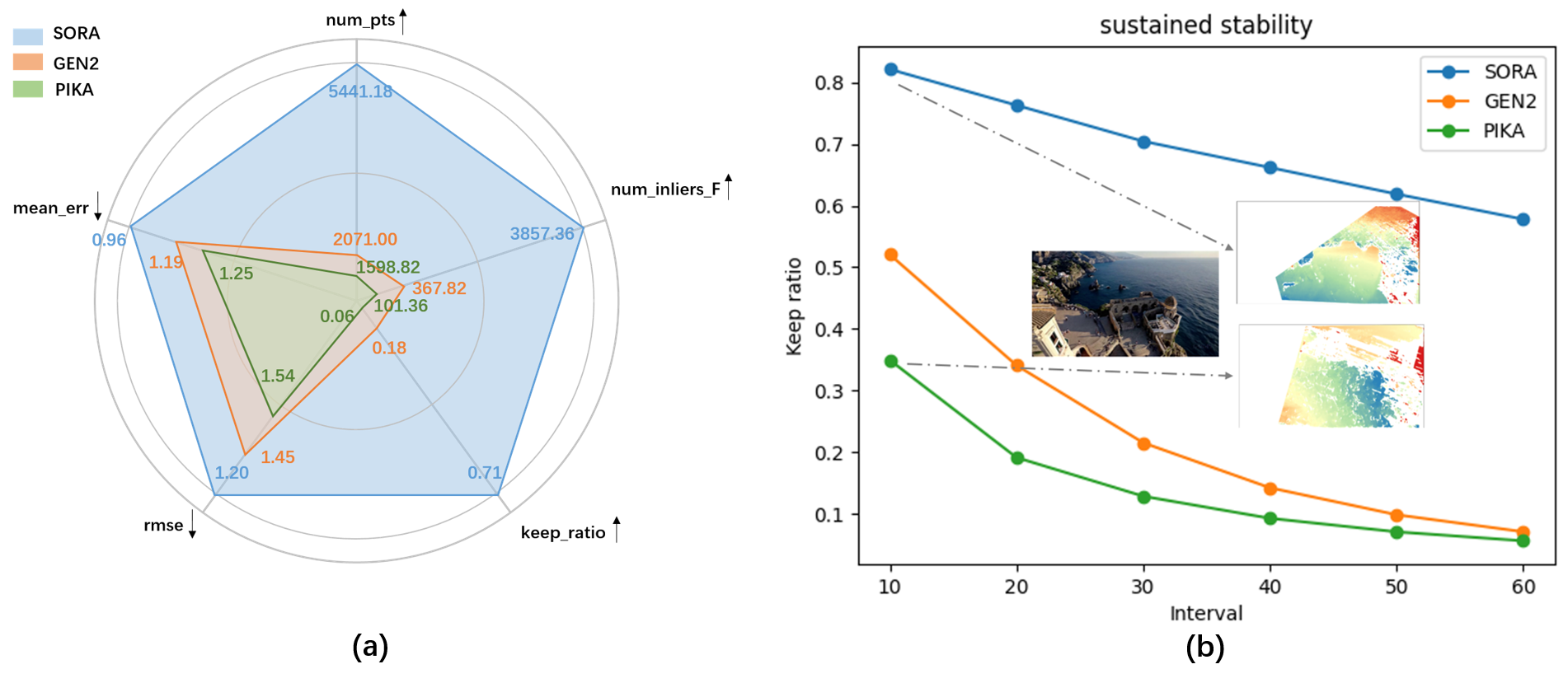

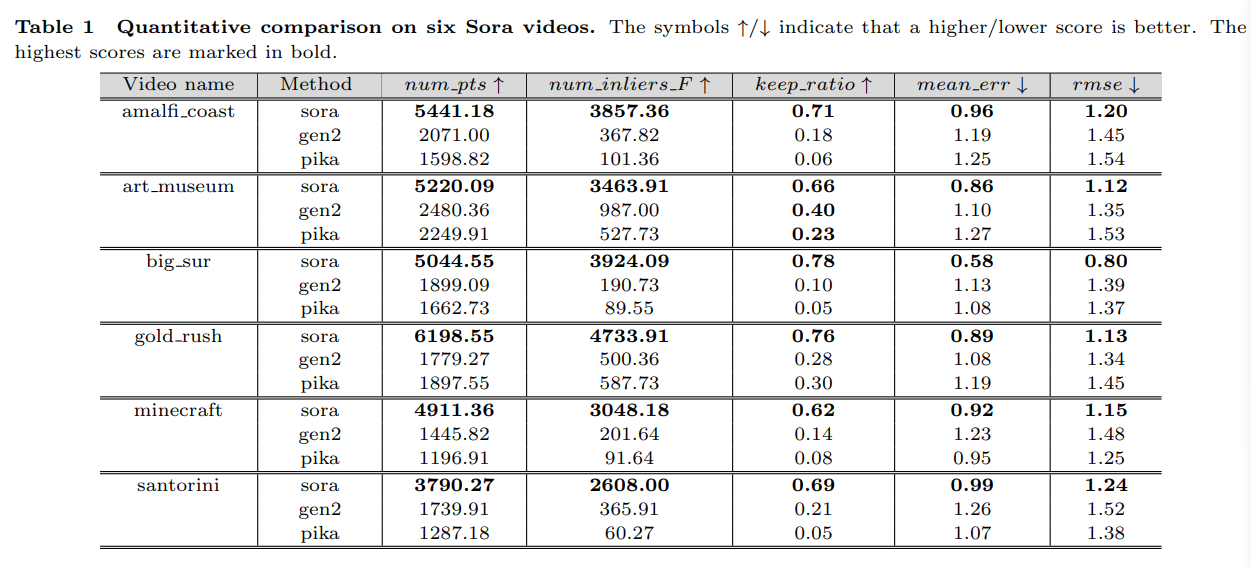

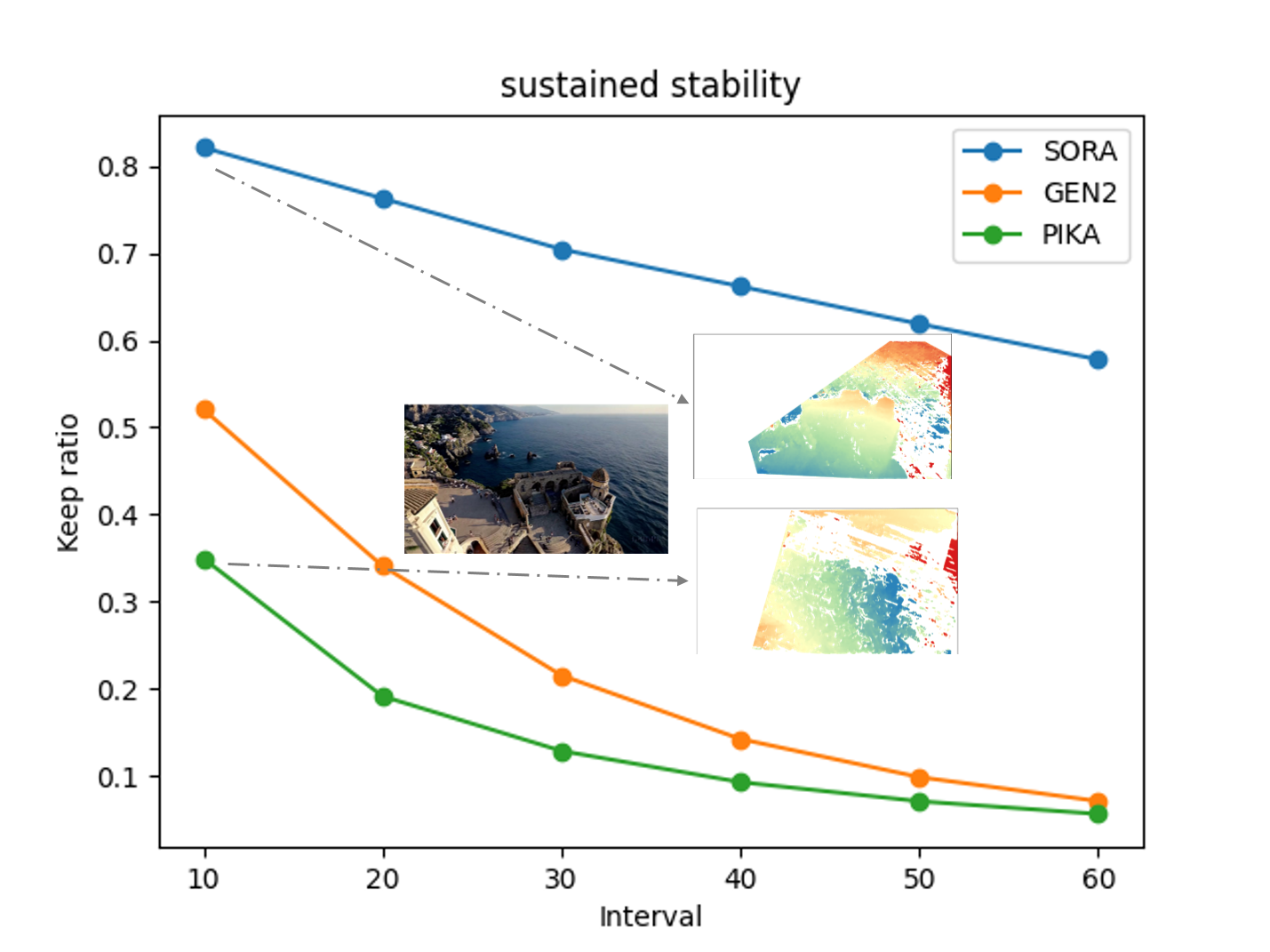

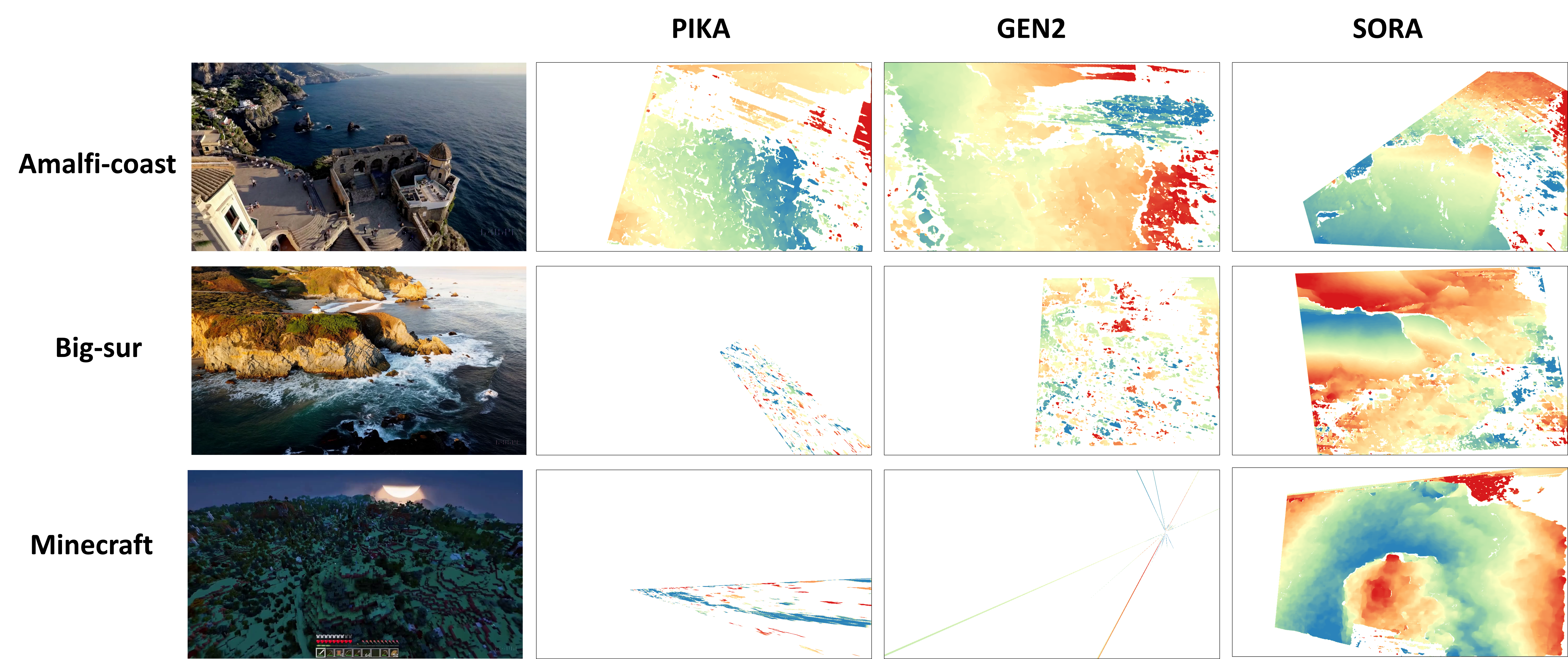

After elimination, we calculate the average number of initial matching points, the average number of retained points, and the average retention ratio. This gives the following metrics: num pts is the total number of initial matching points in a two-view pair, num inliers F is the total number of matching points retained after filtering, and keep ratio is the ratio of num inliers F to num pts. Additionally, for each pair of images, we calculate the bidirectional geometric error d(x, x′) with respect to the fundamental matrix F over the N retained matching points, where x and x′ are a pair of matching points retained after RANSAC and d is the distance from a point to its corresponding epipolar line, measured in both images. Finally, we aggregate the errors over all image pairs to calculate the RMSE (Root Mean Square Error) and MAE (Mean Absolute Error).
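The metrics described above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the dictionary keys, and the convention $x'^{\top} F x = 0$ are hypothetical, and each direction of the bidirectional error is treated as its own residual when computing RMSE and MAE, which the text does not specify.

```python
import math


def mat_vec(M, v):
    """Multiply a 3x3 matrix (list of rows) by a length-3 vector."""
    return [sum(M[i][j] * v[j] for j in range(3)) for i in range(3)]


def transpose(M):
    """Transpose a 3x3 matrix given as a list of rows."""
    return [[M[j][i] for j in range(3)] for i in range(3)]


def point_line_distance(p, line):
    """Distance from pixel p = (u, v) to the line a*u + b*v + c = 0."""
    a, b, c = line
    return abs(a * p[0] + b * p[1] + c) / math.hypot(a, b)


def bidirectional_errors(F, pts1, pts2):
    """Point-to-epipolar-line distances in both images for each match.

    Assumed convention: x2^T F x1 = 0, so F x1 is the epipolar line in
    image 2 and F^T x2 is the epipolar line in image 1.
    """
    Ft = transpose(F)
    errors = []
    for (u1, v1), (u2, v2) in zip(pts1, pts2):
        line2 = mat_vec(F, [u1, v1, 1.0])
        line1 = mat_vec(Ft, [u2, v2, 1.0])
        errors.append(point_line_distance((u2, v2), line2))
        errors.append(point_line_distance((u1, v1), line1))
    return errors


def summarize(pairs):
    """Aggregate the metrics over all two-view pairs.

    Each entry of `pairs` is a dict with hypothetical keys:
    'num_pts'  : number of initial matches for the pair,
    'F'        : 3x3 fundamental matrix estimated by RANSAC,
    'inliers1' : retained points in the first frame,
    'inliers2' : retained points in the second frame.
    """
    num_pts = sum(p["num_pts"] for p in pairs)
    num_inliers_f = sum(len(p["inliers1"]) for p in pairs)
    errors = []
    for p in pairs:
        errors.extend(bidirectional_errors(p["F"], p["inliers1"], p["inliers2"]))
    n = len(errors)
    return {
        "num_pts": num_pts,
        "num_inliers_F": num_inliers_f,
        "keep_ratio": num_inliers_f / num_pts if num_pts else 0.0,
        "rmse": math.sqrt(sum(e * e for e in errors) / n) if n else float("nan"),
        "mae": sum(errors) / n if n else float("nan"),
    }
```

With a fundamental matrix for a purely horizontal camera shift, epipolar lines are image rows, so the error of each match reduces to the vertical offset between the two points. This makes the sketch easy to check by hand.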